About the report

The Health Foundation is an independent charity committed to bringing about better health and health care for people in the UK.

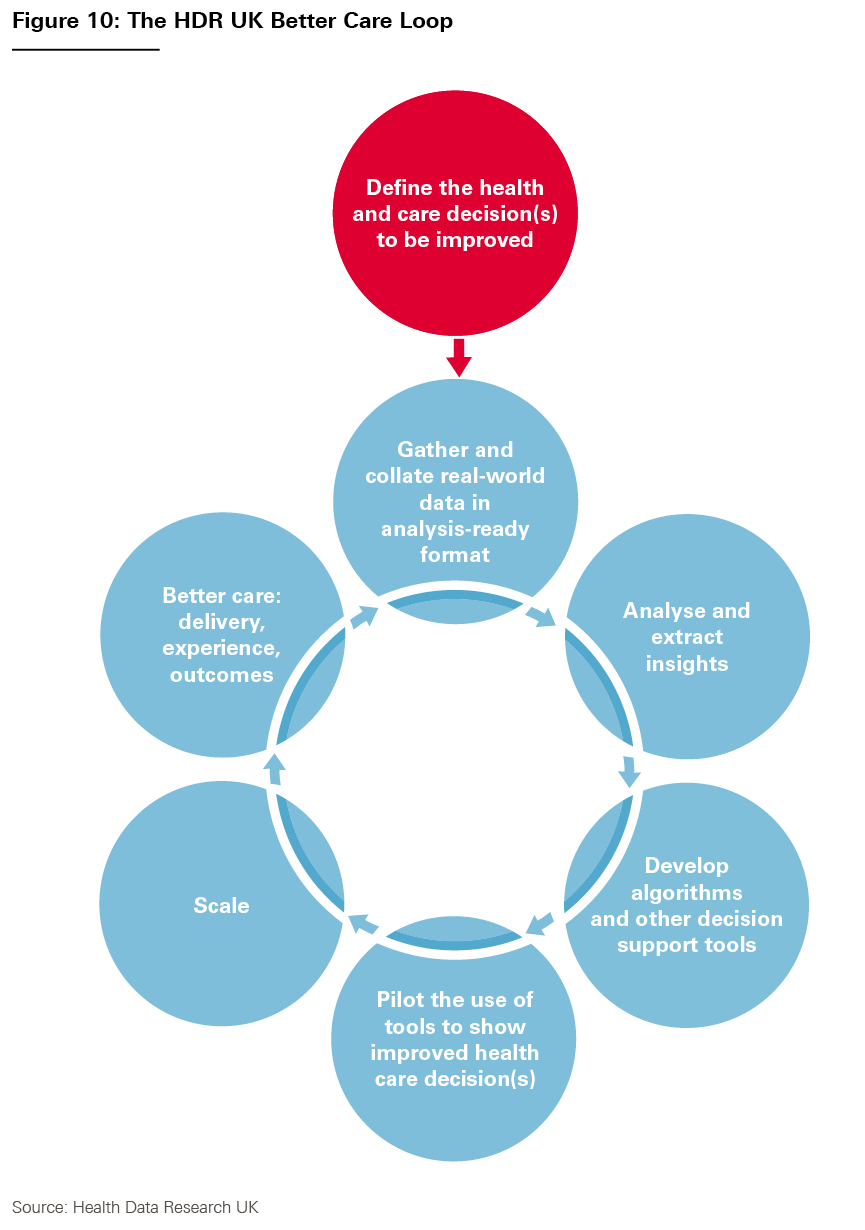

Health Data Research UK (HDR UK) is the UK’s national institute for health data science. HDR UK’s mission is to bring together the UK’s health and care data to enable discoveries that improve people’s lives by uniting, improving and using data as one national institute.

HDR UK’s Better Care programme aims to equip clinicians and patients in the UK with the best possible data-based information to make decisions about their care. Over the past 2 years, as part of this wider programme, the Health Foundation and HDR UK have been working in partnership to deliver the Better Care Catalyst programme. This has funded three projects to develop data-driven tools that aim to improve health care decision making and also supported three workstreams to set out the training, knowledge mobilisation and policy actions required to support data-driven learning and improvement in health care.

This report is the final output of the Better Care Catalyst programme’s policy and insights workstream, which researched the barriers and enablers for implementing learning health system approaches in the UK. It supports the wider Better Care programme and community by providing analysis and advice to further the use of data to improve health care services. It also identifies a range of opportunities and actions that policymakers and organisational and system leaders can take to advance the learning health systems agenda across the UK.

Key points and summary of recommendations

- A learning health system (LHS) is a team, provider or group of providers that, working with a community of stakeholders, has developed the ability to learn from the routine care it delivers and improve as a result – and, crucially, to do so as part of business as usual. Done right, LHSs are not a separate agenda, but about embedding improvement into the process of delivering health care.

- An LHS is a way of describing a systematic approach to iterative, data-driven improvement (regardless of whether those involved label it as an LHS). Learning and improvement are already happening in most providers and, in many cases, LHS approaches will offer a way to pull this existing work together in a more systematic way and organise it more effectively. In this sense, some see LHSs as the next stage in the evolution of traditional quality improvement approaches.

- Tackling the huge pressures that health and care services are currently under will require action on multiple fronts, notably recruiting more staff and increasing investment in services. Amid all these pressures, we should be wary of seeing LHSs as a ‘nice to have’. A step change in the health service’s learning and improvement capability is precisely what is required if it is to find a sustainable way out of the current crisis and effectively reshape care to meet future health needs.

- On the one hand, helping teams and providers become LHSs gives them the tools to diagnose and solve problems and to drive improvement from within – turning them into ‘engines of innovation and improvement’. Over the long term, this potentially offers a powerful and more sustainable route to improving quality and efficiency than simply relying on national programmes or external consultancy.

- On the other hand, LHSs can also be thought of as sophisticated ‘implementation mechanisms’, providing the infrastructure for teams and providers to effectively adapt, embed and refine ideas and innovations from elsewhere. By supporting teams and providers to adopt solutions and implement national change programmes effectively, LHSs can play an important role in helping to deliver national priorities for recovery and service transformation.

- While LHSs require technical capability to analyse data and implement improvements, they are also deeply social – requiring networks of people, collaboration and a conducive culture. Investing in getting this ‘human infrastructure’ right is just as important as the technical side. Ultimately, it is the ability of LHSs to bring people together to ask questions, interpret data, reconcile differing views and make decisions that allows them to successfully effect change in a complex, adaptive system such as health care.

- Our research – informed by a literature review, interviews, a survey of more than 100 expert stakeholders and a series of practical case studies – suggests there is a large gap between the promise and practice of LHSs. This is partly due to the lack of a clear definition, vision and evidence base around LHSs, meaning it can be difficult to know where to start or how to make progress.

- This report aims to demystify the concept of LHSs and explores four important areas especially relevant to LHSs where action can lead to tangible progress: learning from data, harnessing technology, nurturing learning communities and implementing improvements to services. Each of these areas is important in its own right, with much to be gained by making progress on each one individually. Indeed, for those wanting to create LHSs, the first step will often be to develop one or two components. But it is ultimately by bringing these components together into a full LHS that they can become more than the sum of their parts.

- To help facilitate LHS approaches and realise their benefits, policymakers and organisational and system leaders will need to make progress on a range of related policy agendas, such as data, digital maturity and interoperability, and improvement capability and culture. They will also need to develop a clear vision and narrative around LHSs. This report highlights eight key areas for action – summarised in Box 1 – to help overcome the challenges involved.

Box 1: Eight priority areas for action

This report highlights eight areas where action by policymakers (government ministers, civil servants and national leaders) and organisational and system leaders (those in leadership roles in providers and local and regional health care systems) could support the development of LHSs, as shown in Table 1. Further details can be found in Chapter 3.

Table 1: Eight priority areas for action and recommendations for each

| Audience | Area for action | Recommendations |
|---|---|---|
| For policymakers | 1. Clear narrative | |
| | 2. Digital maturity | |
| | 3. Data analytical expertise | |
| | 4. System interoperability | |
| | 5. Implementation and improvement capability | |
| For organisational and system leaders | 6. Learning culture | |
| | 7. Front-line implementation capability | |
| | 8. Organisational improvement capability | |

Introduction

The health and care system in the UK is facing some of the most significant challenges in its history. Services are finding themselves under huge pressure – a result of both the COVID-19 pandemic and a period of significant underinvestment. At the same time, the need to reshape care to better meet future health needs is becoming more urgent. Enabling services to recover and rising to meet these challenges will require the NHS to use every tool available.

Learning health systems (LHSs) offer one possible way to help services recover and improve, even within the current challenging circumstances. Rather than simply relying on ‘top-down’ national policy interventions alone, they harness the power of providers to drive improvement from within – and, moreover, to do so as part of ‘business as usual’. As such, LHSs can be powerful vehicles for improving services and population health. They may be particularly important for achieving successful service transformation over the next few years. For example, NHS England’s Transformation Directorate recently indicated that it sees LHS approaches as important for realising the benefits of new technologies in the NHS. The significant service innovations that were implemented rapidly after the onset of the COVID-19 pandemic show what can be achieved when people come together around a common ambition, when we maximise the use of data and when staff are supported to deliver change.

In many ways, health care has always been a form of ‘learning system’. But recent advances in data and technology, coupled with the move towards better collaboration and integration between services, are now presenting new opportunities to learn and improve in a more systematic way. Since the term ‘learning healthcare system’ was coined by the Institute of Medicine in the US in 2007, interest in the LHS agenda has been growing rapidly.

Despite this growing interest, most providers and systems have not yet been able to capitalise on the potential of LHSs. There are several reasons for this gap between promise and practice. There are many different conceptions of LHSs, and a robust, practical evidence base is lacking. This makes it difficult to forge consensus on what an LHS is and the benefits it can bring. There is also a range of practical challenges in creating LHSs: the capabilities they require, such as data analytics and quality improvement skills, need nurturing in their own right, and attempts to do so often run up against wider structural, policy or resource barriers. At the same time, it can be hard for policymakers and organisational and system leaders to know how best to support this agenda.

The report

This report, part of Health Data Research UK’s (HDR UK’s) Better Care programme, aims to tackle these questions head-on. We seek to demystify LHSs and contribute to a clearer narrative and vision about the role LHS approaches can play in improving health and care. We also seek to identify the most pressing challenges, explore several important areas where targeted action by policymakers and organisational and system leaders could lead to tangible progress, and support policymakers and practitioners as they consider the next steps.

For this project we carried out desk research and conducted interviews with expert stakeholders. To further investigate the opportunities and challenges for LHSs, we conducted a purposive online survey of 109 expert stakeholders between December 2021 and January 2022, the results of which are described throughout this report. Our respondents represented a range of expertise from across the UK, both in LHSs and in the key areas of LHS activity, such as collecting and analysing data, engaging patients and the public, and quality improvement. We also explored existing examples of LHSs, with detailed investigation of 16 case studies. In addition, we drew together learning from the Health Foundation’s programmes and research across areas such as quality improvement, technology and data analytics, as well as our experience of supporting networks such as the Q community and the NHS-R community. We also drew on learning from HDR UK’s Better Care programme.

Content overview

Chapter 1

Chapter 1 looks at what LHSs are and the different types in existence, as well as highlighting the common activities and assets underpinning them all. It explores the growing interest in LHSs in the UK and considers why they might be particularly relevant over the coming years.

This chapter will be useful for those interested in the concept of LHSs and those who would like to understand how LHS approaches can address key health and care challenges and drive improvement.

Chapter 2

Chapter 2 considers four key areas that are particularly important for LHSs: learning from data, harnessing technology, nurturing learning communities and implementing improvements to services. For each, it details the key opportunities and challenges for LHSs, drawing on our survey evidence to identify which challenges are the most pressing.

This chapter will be useful for those wishing to understand in more detail the different aspects of LHSs and some current challenges for developing them. Some readers may already be familiar with the debates in particular sections (as many of the complexities facing LHSs are reflective of broader challenges) and so may wish to explore the sections with which they are less familiar.

Chapter 3

Chapter 3 explores key priorities for developing LHS approaches, as identified by our survey respondents. It also offers recommendations for how these priorities could be realised.

This chapter will be useful for policymakers and organisational and system leaders to understand what practical actions they can take to support the development of LHSs. It may also be useful for practitioners who wish to understand the broad spectrum of actions that can be taken to support LHS approaches.

Case studies

Throughout this report, we draw on 16 case studies to exemplify LHS approaches that are already being applied across the UK (see Box 2 for a summary).

Box 2: Case studies

We present 16 case studies in this report. As indicated in Table 2, some of the case studies are presented as examples of full LHSs, while others concentrate on one of the four areas discussed in Chapter 2.

Table 2: The 16 case studies and what they focus on

| No | Case study | Used to illustrate… |
|---|---|---|
| 1 | Flow Coaching Academy | Full LHS |
| 2 | PINCER – a pharmacist-led intervention to reduce medication errors | Full LHS |
| 3 | CFHealthHub – a digital learning health system | Full LHS |
| 4 | Nightingale bedside learning coordinator | Full LHS |
| 5 | The Clinical Effectiveness Group | Full LHS |
| 6 | The Children & Young People’s Health Partnership | Full LHS |
| 7 | The Secure Anonymised Information Linkage (SAIL) Databank | Data |
| 8 | Informatics Consult | Data |
| 9 | Towards a national learning health system for asthma in Scotland | Data |
| 10 | Reducing the health burden of diabetes with artificial intelligence-powered clinical decision tools (RADAR) | Technology |
| 11 | Cambridge University Hospitals’ eHospital programme | Technology |
| 12 | Project Breathe – artificial intelligence-driven clinical decision-making tools to manage cystic fibrosis | Technology |
| 13 | Thiscovery | Learning community |
| 14 | Q Lab UK | Learning community |
| 15 | HipQIP – hip fracture quality improvement programme | Improvement |
| 16 | Reducing brain injury through improving uptake of magnesium sulphate in preterm deliveries (PReCePT2) | Improvement |

What are learning health systems and why do they matter?

1.1. What are learning health systems?

A learning health system (LHS) is a way of describing a team, provider or group of providers in the health and care system that, working with a community of stakeholders, has developed the ability to learn from its own delivery of routine care and improve as a result. At its most fundamental, an LHS comprises a set of activities and assets that enable continuous learning and improvement of services.

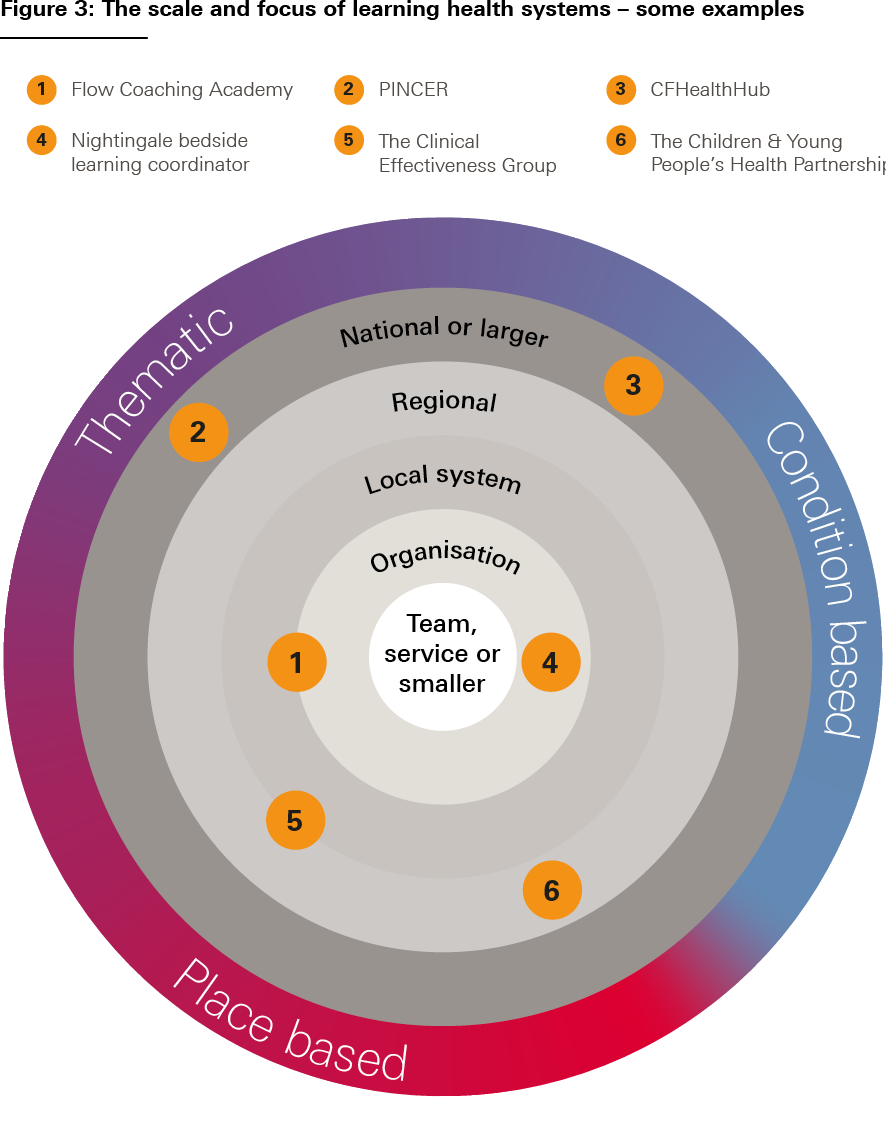

LHSs are an important method for improving the quality, efficiency and effectiveness of health and care services. But there are many different types of LHS, ranging from the clinical microsystem level to the national level and everything in between. As a result, the term tends to be used in many different ways. We think that this can, on occasion, be a stumbling block to making progress in this field – with people sometimes talking at cross purposes or using terminology in overly restrictive ways.

For that reason, we begin this chapter by developing an analytical framework for understanding the key components of LHSs and for characterising the variety of types that exist.

Common aspects of learning health systems

While there are many different types of LHS, they all have some key factors in common, although important variation can emerge in relation to these factors.

- The provision of services. At the core of an LHS sits a service provider or providers, and the desire to improve service provision and outcomes drives the LHS’s activity. An associated factor is that a key source of data from which an LHS learns is data generated from routine service provision (whether clinical data, operational data, patient-reported data and so on). This is one important thing that makes an LHS different from many types of research or trials relying purely on bespoke data collection. (Another important difference is the continuous, iterative nature of the learning that takes place within LHSs – discussed further below.) The presence of a provider means the type of improvement that LHSs do can be endogenous (driven from within) rather than simply exogenous (externally driven by factors such as policy or regulation).

One possible source of variation among LHSs is therefore the type and sector of the provider in question and the nature of services being delivered (for example, health care, public health, social care or community services). What is being improved will also affect who the ‘service users’ might be on any particular occasion (for example, patients, staff, carers or citizens).

- The learning community and improvement ambition. An LHS is driven by a learning community that has been formed around a common ambition of improving services and outcomes. Not everyone in the learning community will necessarily be involved in every stage of the LHS (for example, patients might be involved in formulating ideas and trialling service changes but not in data analysis; data analysts might be involved in generating learning from data but not implementing service changes; and so on). However, all share and contribute to the common ambition in some way.

Another critical source of LHS variation is therefore the nature of the learning community, and its corresponding improvement ambition:

- They could be place based, and if so they could exist over a range of geographies (for example, based around an individual provider organisation, a health economy or the whole NHS).

- They could be condition based (for example, improving care for people with cystic fibrosis).

- They could be thematic (for example, improving procurement, adopting a particular technology or reducing a particular type of medical error).

- They could combine these properties (for example, improving asthma care for young people in London).

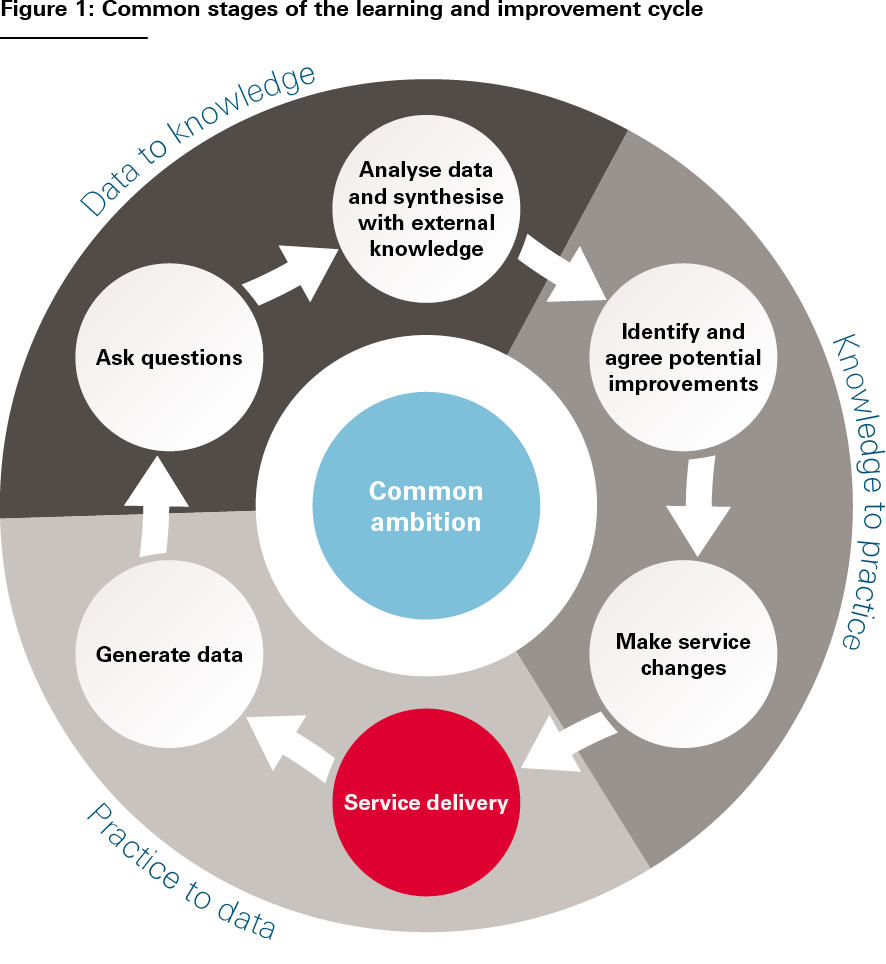

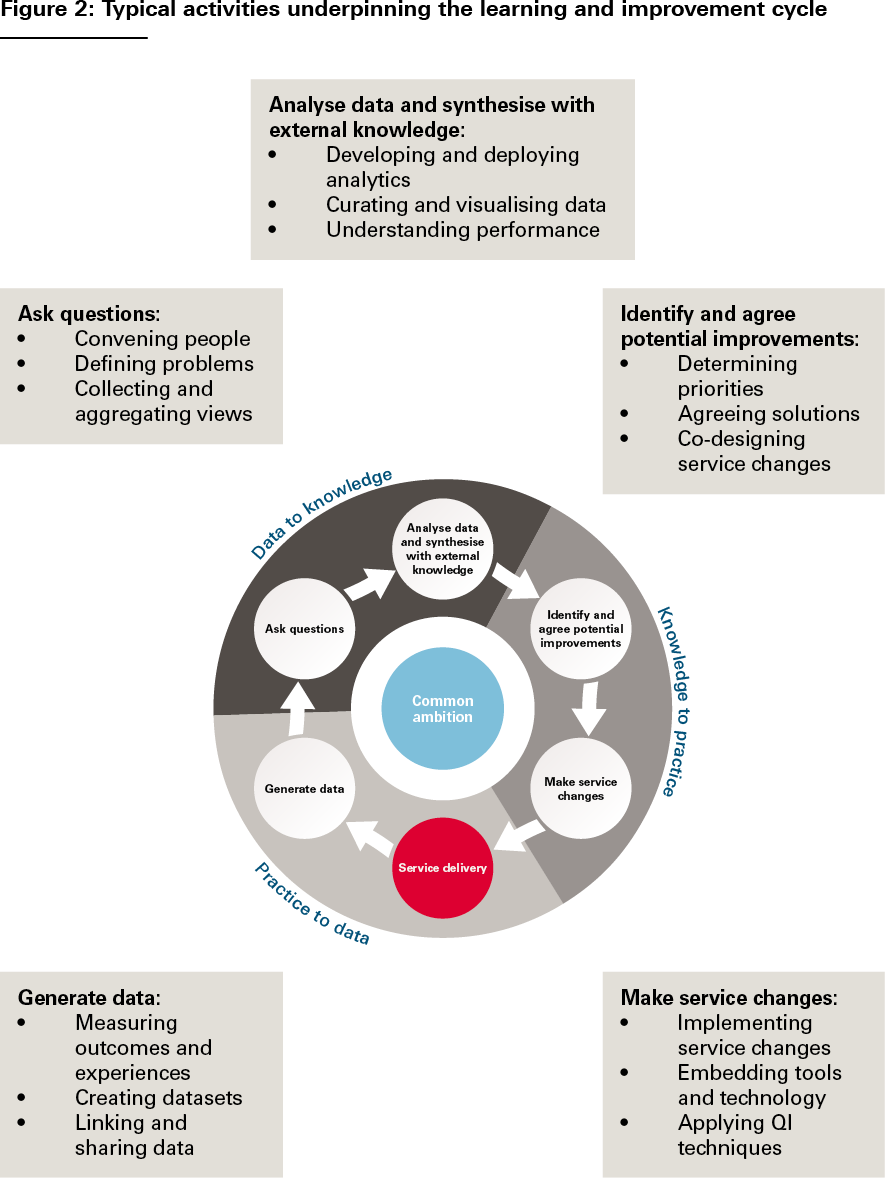

- The learning and improvement cycle. LHSs effect change through iterative learning cycles based on generating and learning from data and formulating and testing service changes. Despite the huge diversity of LHSs, their learning and improvement cycles tend to be based on a common set of stages, illustrated in Figure 1. And at each stage of the cycle, the same types of activities tend to be going on: measuring outcomes, formulating hypotheses, analysing performance, designing improvements, implementing service changes and so on (see Figure 2). The cycle is then repeated, allowing each subsequent iteration to test and evaluate the service changes implemented in the previous iteration. These activities are the ‘bread and butter’ of LHSs. And it is by focusing on how to do these activities well that we can support the development of LHSs, whatever their form.

Source: The Health Foundation’s Insight & Analysis Unit

Importantly, if it is the presence of certain activities that constitutes an LHS, then it does not really matter whether the individuals involved think of it as an LHS or not. As we will see with some of the case studies explored in this report, something can be an LHS even if the practitioners involved do not use that terminology or did not set out explicitly to develop an LHS.

Figure 1 is not intended to be a systematic analysis of the learning and improvement cycle, but simply a useful way to think about the constituent activities that go on in an LHS. It broadly corresponds to Charles Friedman’s influential three-stage characterisation of the learning cycle: practice to data; data to knowledge; and knowledge to practice (indicated in the figure).

Source: The Health Foundation’s Insight & Analysis Unit

However, something the classic tripartite LHS schema does not always make explicit is how values and intentions get into the cycle – how questions get asked and priorities for improvement get determined. And this is a more fundamental issue than just the initial selection of an improvement ambition – the need to ask questions, gather and aggregate views, reconcile differences, and make judgements and course corrections is an intrinsic part of the learning process. For that reason, we think that it is important to consider problem definition and solution design as explicit parts of the learning and improvement cycle – signified in Figures 1 and 2 by the actions ‘ask questions’ and ‘identify and agree potential improvements’. Indeed, as we will discuss further later, it is these fundamentally human processes of convening, interacting, deliberating and making decisions that make the social infrastructure of LHSs just as important as their technical infrastructure.

Differences in the way each stage of the learning and improvement cycle happens can be another important source of variation between LHSs. Each stage can differ in scale and intensity (appropriate to the LHS’s goals) – that is, in the depth and granularity of the work going on, the number of people involved, the timescale, the cost and so on. For example, the data analysis involved in a learning and improvement cycle could range from reading a patient feedback form to a lengthy research study involving novel and complex analytics, while the service changes could range from putting up a sign in a waiting room to redesigning a whole health care pathway.

The scale and intensity of different stages of the learning and improvement cycle will therefore greatly affect what the LHS looks like in practice. In particular, the greater the scale or intensity required, the more likely it is that different individuals will lead different aspects of the cycle, or that these stages will happen in different environments. While at the smallest scale, the stages of an LHS could be executed by a single individual in a single environment (for example, a clinician using real-time feedback in a mobile app to optimise their practice), at the other end of the spectrum might be a learning and improvement cycle that involves lengthy research projects, specialist engagement exercises, the lab-based development of new technology or data tools, or the cross-organisational implementation of new clinical pathways.

In summary, variation in the three aspects of LHSs outlined here – the nature of services provided, the nature of the learning community and improvement ambition, and the scale and intensity of each stage of the learning and improvement cycle – makes many different types of LHS possible. Figure 3 illustrates some of this diversity, using a selection of the case studies presented in this report.

Source: The Health Foundation’s Insight & Analysis Unit

These six case studies are presented at the end of Chapter 1.

1.2. The assets underpinning learning health systems

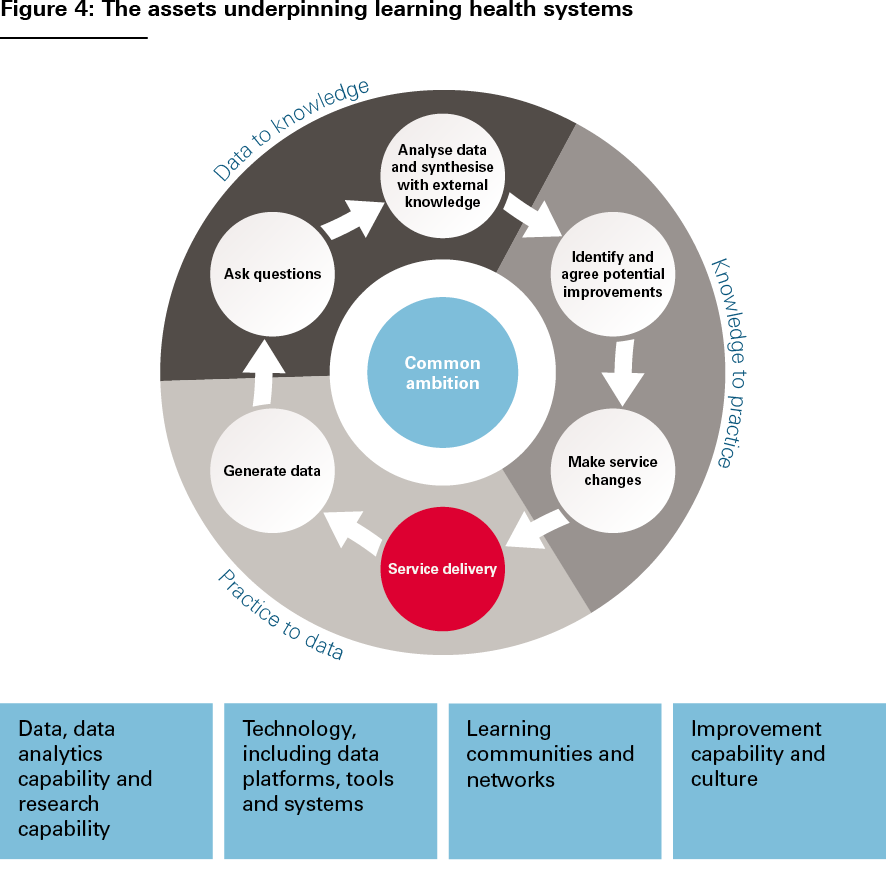

Another approach to thinking about LHSs is to consider the capabilities and infrastructure that typically underpin them – in other words, going beyond thinking about the activities of the learning and improvement cycle to consider the assets on which these activities rely.

These assets, illustrated in Figure 4, include:

- data, data analytics capability and research capability, including skilled researchers and analysts

- technology, including data platforms, tools and systems, as well as an organisation’s wider digital maturity

- learning communities and networks, along with mechanisms, spaces and support for convening, deliberating and sharing knowledge

- improvement capability and culture, along with resources to enable the planning, design and implementation of improvements to care.

Source: The Health Foundation’s Insight & Analysis Unit

As the scale of an LHS increases, these assets will tend to become more visible and significant. And, of course, they are not static: they will develop and mature over time with successive iterations of the learning and improvement cycle and successive projects. And there is a need to continually nurture them.

Importantly, a provider or learning community might exhibit some of the components of an LHS but not all of them. And it is worth emphasising that the activities, capabilities and infrastructure required for successful LHSs are valuable in their own right, even when they are not being used as part of a full LHS.

Focusing on these kinds of assets and how they can be successfully developed should therefore be an important aim in itself. In practice, when thinking about how to support the development and evolution of LHSs, rather than trying to create entire LHSs in a single step, it can be more effective to focus on developing one or two components first – for example data analytics or improvement capability. In many cases, it will be about identifying what components are already in place and building on these. LHSs are not ‘all or nothing’ in this respect. Nevertheless, ultimately, it will be by bringing all these different components together and ensuring they are working in partnership that the LHS will become more than the sum of its parts.

Furthermore, there are other important elements of learning infrastructure in health and care that complement the concept of LHSs described here, and on which LHSs rely to connect and share learning (see Box 3 for further discussion).

Box 3: The relationship between learning health systems and other types of systematic learning in health and care

LHSs as described here are only one way in which systematic learning and improvement can happen in health and care. Other approaches exist that are not necessarily provider centred, do not necessarily involve learning and improvement cycles, and may not even focus on a particular improvement ambition. Examples include the role of networks in spreading innovation – for example, Q, a community of thousands of people across the UK and Ireland collaborating to improve health and care – or peer learning through clinical communities. These kinds of wider approaches may use similar infrastructure and capabilities to LHSs (networks, data, improvement capability and so on), but they often go beyond the reach and focus of individual LHSs.

An exploration of these broader approaches to learning is beyond the scope of this report. But it is worth noting that they may play a very important role in helping to create an environment in which LHSs can flourish. While the endogenous aspect of LHSs (driving change from within) is one of their strengths, it does mean there is a need for linking mechanisms between them. Without this, there is a risk of siloed improvement efforts.

These broader approaches can be particularly important in bridging between different LHSs – helping them learn from each other and tackle unwarranted variation between different providers. They may also provide critical pieces of infrastructure on which LHSs rely, for example, platforms for research and consultation like Thiscovery (see case study 13 in the next chapter). So, LHSs as described here should not be considered in isolation from the health and care system’s wider learning infrastructure.

1.3. How learning health systems can help

Why should providers and learning communities be supported to adopt an LHS approach?

First, LHSs can support the delivery of externally led change through national programmes by providing the means to implement changes, test them and iteratively adapt and refine them, working with the very patients and staff the changes apply to. For example, in England, there are significant opportunities to develop LHS capabilities within integrated care systems as a way of supporting the successful adaptation and embedding of new pathways and models of care. In this guise, LHSs can be thought of as sophisticated ‘implementation mechanisms’ that can help deliver national priorities for service transformation.

But LHSs also matter because they are critical for enabling locally led service change. Many of the challenges in health and care cannot be solved by top-down change programmes alone. And while local systems face common problems and many solutions are generalisable, there are also problems and solutions that are specific to individual contexts, which those closest to them will need to diagnose and solve.

By creating the capability to learn and improve from within, LHSs can turn providers into ‘engines of innovation and improvement’, driving improvement in a way that is not reliant on national initiatives or investment. And over the long term, endogenous, continuous improvement has the potential to achieve more than a series of centrally led improvement initiatives and may in many cases be more effective in achieving sustainable quality and efficiency gains.

Another reason for the growing currency of LHSs is that they are an important way to capitalise on the increasing availability of data and analytical tools. In short, our ability to learn has never been greater. Crucially, developments in data and data analytics are giving providers themselves the power to gain insights about pressing challenges and how to solve them, reducing the need for external analytic capability. The increasing sophistication of technologies such as artificial intelligence also presents further significant opportunities for data-driven service improvement.

National policy has been slower to focus on LHSs than other drivers of health care improvement (such as targets, incentives and competition). However, interest in LHS approaches has grown over the past decade as the limitations of these more traditional, top-down policy levers have become apparent. In England, for example, the 2013 Berwick review set out a vision for the NHS to become ‘a system devoted to continual learning and improvement of patient care’ and this led to the government proposing that the NHS should become ‘the world’s largest learning organisation’. More recently, the 2021 Integration and Innovation White Paper contained the ambition of ‘accelerating [the system’s] ability to learn, adapt and improve’, while NHS England have argued that integrated care systems should become ‘consciously learning systems’. Meanwhile, Healthcare Improvement Scotland sees the development of ‘human learning systems’ as a key part of its approach to quality management. So there now appears to be acceptance at the national level that building a culture of continuous learning and improvement is essential for improving quality, efficiency and effectiveness.

For all these reasons, LHSs are an idea whose time has come. Not only are there increasing opportunities to deploy them and increasing interest from national policymakers, but we will not be able to adequately tackle the huge challenges facing services unless we fully exploit the potential of providers to learn and improve. Box 4 explores where the greatest potential for further development might lie.

The chapter concludes with six case studies from the UK of LHSs of varying scale and focus. And to gain insights from other countries where LHS approaches are used, Box 5 gives three international examples.

Box 4: Development opportunities for learning health systems – what our respondents said

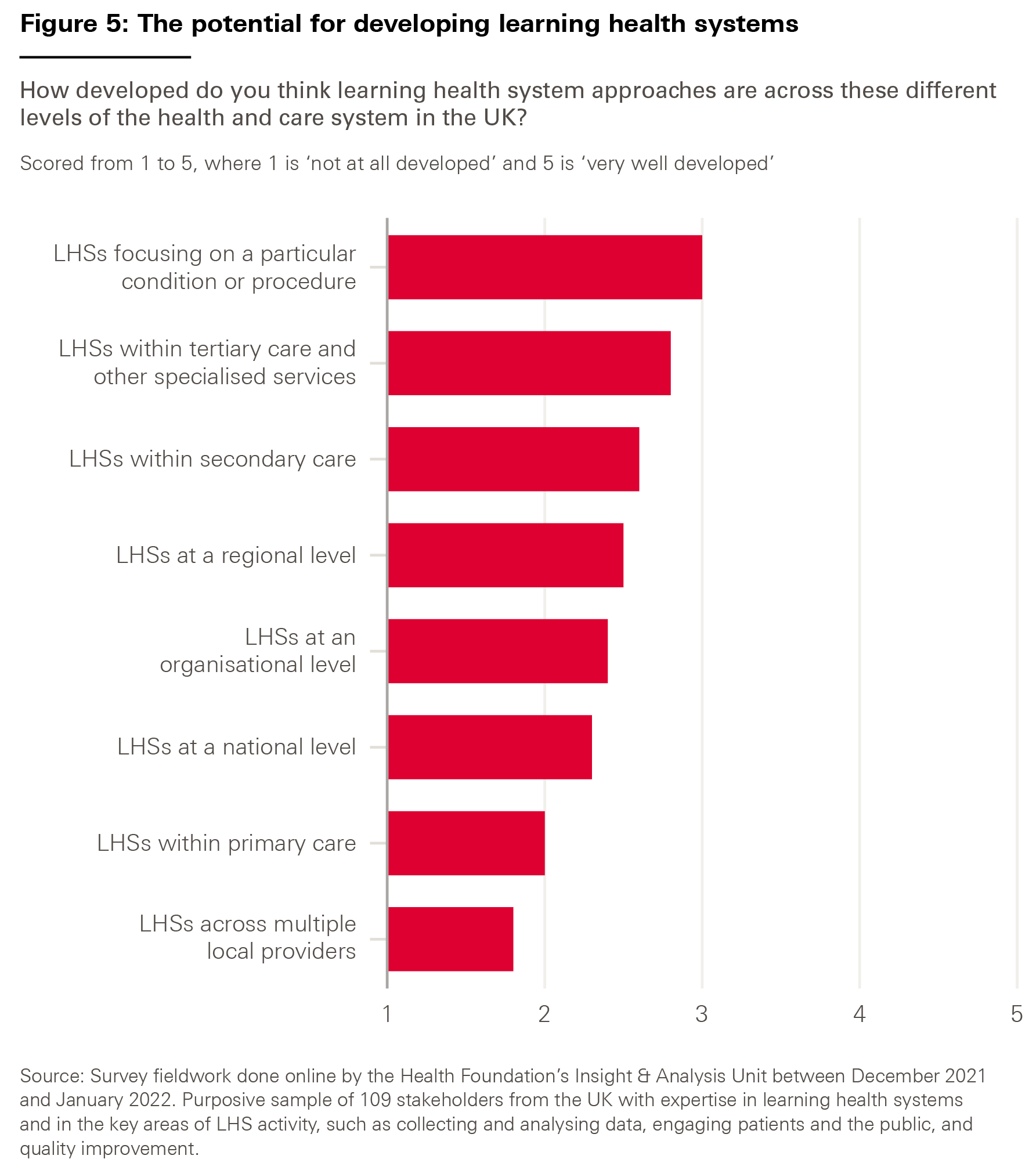

Given the diversity of possible types of LHS, we asked our survey respondents how developed they thought LHS approaches currently were across different levels of the health and care system – with an eye to understanding where the greatest potential for further development might lie.

The results, shown in Figure 5, suggest that LHSs have scope for development at all levels. But respondents felt that LHSs across multiple local providers, such as integrated care systems and provider collaboratives, were the least developed – perhaps unsurprisingly given the nascent state of these structures.

Case study 1: The Flow Coaching Academy

The effective movement of patients between departments and organisations, along pathways of care, and around the wider health and care system, is an essential part of delivering safe, timely and high quality care. Poor flow is a major contributing factor to adverse outcomes, readmissions and higher mortality rates, whereas good flow can improve outcomes and waiting times, reduce duplication and improve efficiency.

Set up by Sheffield Teaching Hospitals NHS Foundation Trust in 2016, the Flow Coaching Academy empowers teams to improve flow through a common purpose, language and quality improvement method. Through open, inclusive and non-hierarchical safe spaces called ‘Big Rooms’, teams collaboratively identify, develop and test local solutions informed by qualitative and quantitative data. Critically, each Big Room starts with a patient story to make sure patients’ voices are a central part of the process – whether through a clinician telling a patient story or inviting patients to Big Room meetings.

The Flow Coaching Academy’s Roadmap for Improvement and ‘5Vs Framework’ underpin each Big Room, giving teams a way to assess a pathway and develop a shared understanding of it. Flow coaches, who have undertaken a one-year action-learning programme to develop relational and technical skills, including data analysis and coaching, work with teams to identify and achieve sustainable improvements to care within and across pathways.

The Flow Coaching Academy has delivered training to nearly 400 coaches from NHS trusts, clinical networks, charitable organisations and health boards across the UK. It has developed a network of local academies and training is currently taking place in Northumbria, Lancashire & South Cumbria and Sheffield.

The Big Room approach shows the importance of creating a learning culture where teams have the tools, opportunity and time to collectively define and implement improvements to service delivery. Part of the success of the Big Room, which emphasises that improvement is ‘20% technical and 80% relational’, is the focus on building multidisciplinary teams and a shared understanding, empowering all members to contribute.

Coaches encourage teams to take ownership of both the learning and improvement process and the data that inform it, which helps to develop better relationships across professional disciplines, including between clinical staff and data analysts. This is essential in building understanding of service performance.

Case study 2: PINCER – a pharmacist-led intervention to reduce medication errors

Medication errors, such as mistakes with prescriptions, preparation or dispensing, occur more than 237 million times a year in England. While most are minor, in an estimated 1.8 million cases these medication errors could lead to serious patient harm.

Researchers at the Universities of Nottingham, Manchester and Edinburgh developed PINCER, a pharmacist-led intervention that combines clinical audit tools with quality improvement methodology and educational outreach. Through the PINCER online resource centre, pharmacists can download searches to run on GP clinical systems that identify patients at risk of medication error. Pharmacists can compare their data to other practices across the country and then work with practice teams to improve prescribing processes and reduce potential harms.

PINCER goes beyond simple feedback tools by providing training through action learning sets that give participants the resources and skills needed to drive improvement and embed changes into everyday practice. Pharmacists develop skills in using quality improvement tools and strategies, root cause analysis, action plan development and delivering feedback. The action learning sets model has also provided participants with informal peer networks to support continuing development. More than 2,350 health care professionals have now been trained to deliver PINCER, including 1,785 pharmacists.

Supported by the Health Foundation and all 15 Academic Health Science Networks, the initiative, led by PRIMIS at the University of Nottingham, has now been adopted by more than 40% of GP practices in England through a social franchising model. This model has given individual localities the flexibility to tailor the intervention to their needs, which has been critical to its successful scaling. As a result, more than 220,000 at-risk patients have been identified, and analysis of follow-up data from 1,677 practices has shown a reduction of 32% in the number of patients at risk of hazardous prescribing associated with gastrointestinal bleeding – a common cause of medication-related hospital admissions.

Case study 3: CFHealthHub – a digital learning health system

Around 15 million people in England are living with at least one long-term health condition, and their care accounts for 70% of health and care expenditure. However, it is estimated that up to half of all medicines prescribed in the UK for long-term conditions are not taken as recommended, with poor adherence to medical treatment having both a personal and an economic impact.

For the 10,600 people living with cystic fibrosis in the UK, daily inhaled medicines are vital for staying healthy, but only around 36% of people with cystic fibrosis are fully adherent to their complex treatment plans. To address this challenge, CFDigiCare, a collaboration of clinicians and people with cystic fibrosis, developed CFHealthHub – a digital LHS that seeks to optimise cystic fibrosis outcomes by creating a national community of practice that uses data to improve care.

Through a digital platform co-designed with users, people with cystic fibrosis can track their progress by accessing real-time medication data captured by their Bluetooth-enabled nebuliser. The CFHealthHub mobile app shows these data through accessible, colour-coded graphs that give feedback on treatment-taking.

Users can also choose to share the data with their clinicians, who then work with them to support behaviour change, identify barriers to effective treatment and talk through evidence-based strategies for overcoming them. A 19-centre randomised controlled trial showed that CFHealthHub increased adherence to treatment while reducing the burden and effort of self-care.

As of May 2022, CFHealthHub is used by 60% of adult cystic fibrosis units in England, creating a learning community of clinicians, managers, pharmacists and allied health professionals who are sharing their learning and best practice. Using the real-time automatic data capture of CFHealthHub, this community of practice is able to understand how well the system is supporting people with cystic fibrosis.

This has led to, and provided the infrastructure for, several linked systems-optimisation workstreams. For example, the National Efficacy-Effectiveness Modulator Optimisation programme is using data from 1,000 participants to carry out a real-time health technology assessment of a new medication that can significantly improve lung function. CFHealthHub has also shown how data gathered by technologies can be built into care without burdening the patient or clinician, and how they can be used both to support system learning and to improve personalised support for people with long-term health conditions.

Case study 4: Nightingale bedside learning coordinator

During the onset of the COVID-19 pandemic, NHS England set up NHS Nightingale Hospital London (the Nightingale) as a temporary facility in an east London convention centre to cope with the rising number of critical care patients in London. The novel setting, established quickly and staffed by newly formed teams, meant the Nightingale had to manage significant risk and potential human error. Given the knowledge gap surrounding COVID-19 and the need to implement learning about the disease rapidly, the Nightingale was purposefully designed to be an LHS. The LHS approach enabled the Nightingale to rapidly make decisions backed by data and evidence to improve the delivery of care, quickly monitor the impact and make iterative adjustments where necessary.

A key component of the LHS involved gathering staff insights and ideas for improvement. The bedside learning coordinator role was developed as a mechanism to gather these insights rapidly and continuously without creating a burden for staff. The role involved:

- capturing staff insights into what was and was not working

- rapidly feeding these insights back to the leadership teams to review and agree how to respond

- implementing agreed changes as appropriate

- enabling robust feedback loops.

Staff from a diverse range of professional backgrounds (both clinical and non-clinical) undertook bedside learning coordinator shifts to give a broad set of perspectives and insights.

Insights captured were triaged into three areas: fix (requiring immediate action), improve (needing suggestions for better ways of doing things) and change (requiring substantial changes). Bedside learning coordinators worked with a central quality and learning team to triangulate insights from the bedside with other data sources, such as incident reports, team debriefs and performance dashboards as well as external evidence, to inform decision making and implement required actions as appropriate. In addition, they carried out focused audits to confirm that implemented changes were successful, satisfactory to staff and sustainable. One example of this in action was the identification of mouth care as an area for improvement. Following concerns that staff had raised, a speech and language therapist completed a bedside learning coordinator shift to give specialist insight and recommendations. These were then adopted as standard operating procedure.

The Nightingale demonstrates that health care staff often have rich insights and ideas for improvement (including how to improve patient care, workplace efficiency and staff wellbeing), which, when analysed alongside other routine data sources, can support improvement work. The bedside learning coordinator role provides a mechanism to gather these insights, as well as giving staff a greater voice and empowering them to deliver tangible improvements as part of a wider LHS.

Since the initial pilot, several other large NHS organisations have adopted the bedside learning coordinator concept.

Case study 5: The Clinical Effectiveness Group

Data sharing between organisations within health and social care is often disjointed, leading to limited sharing of learning and the duplication of work between providers. As general practice moves to a model where bigger operational units – such as integrated care systems, primary care networks and GP federations – support service users with more integrated care, there is an opportunity to pool learning to support continuous improvement as part of an LHS.

The Clinical Effectiveness Group (CEG) at Queen Mary University of London is an academically supported unit that facilitates data-enabled improvement for 272 north-east London GP practices, serving 2.2 million patients. It brings together people from a range of disciplines, including clinicians, data analysts, informaticians, academic researchers and a team of facilitators who conduct around 300 GP practice visits a year.

The CEG builds standardised data entry templates that GP practices use to enter high quality data into their patient records at the point of care. Its software tools, searches and on-screen prompts then turn these data into actionable insights within the practice, for example to stratify patients by risk or to support self-reported measurements such as home blood pressure recording.

The CEG’s cardiovascular disease tools have contributed to improvements in blood pressure control, statin use and the management of other associated long-term conditions in the local population, with pre-pandemic performance among the highest in England. For example, pharmacists in the London Borough of Redbridge, in collaboration with St Bartholomew’s Hospital, are using one such tool – APL-CVD (Active Patient Link tool for Cardiovascular Disease) – to improve statin prescribing and identify suitable patients for a new drug that reduces cholesterol.

CEG analysts also create interactive dashboards showing performance across the region, allowing for the identification of areas requiring improvement. The CEG uses this evidence to design and deliver local guidelines and quality improvement programmes to reduce unwarranted variation in outcomes. The most recent is a programme to reduce inequalities in childhood immunisations. The CEG has championed GP recording of self-reported ethnicity to support the identification and reduction of health inequalities. The dashboards similarly reflect information on a range of equity indicators that local authority public health teams use to inform local initiatives.

Evaluation of the CEG identified key contributors to its success including:

- access to high quality coded GP data from across north-east London

- trust and credibility in its use of data

- engagement with local clinicians and health care providers

- the expertise of its clinical leads.

The CEG’s approach has put health data into practice to build an LHS in north-east London. The team is now working with other integrated care systems in London to support this approach in other areas as part of the London Health Data Strategy.

Case study 6: The Children & Young People’s Health Partnership

Research shows that some health systems are struggling to keep pace with the changing health needs of young people, and wide inequities in health remain among this group. With more than 180,000 children and young people living in the densely populated, diverse and fast-growing London boroughs of Lambeth and Southwark, an integrated approach to the delivery and coordination of care for this rapidly evolving population is essential.

The Children & Young People’s Health Partnership (CYPHP), hosted by Evelina London Children’s Hospital and part of King’s Health Partners, is a population-level LHS aiming to deliver better health for children and young people. Bringing together providers, commissioners, local authorities and universities, the CYPHP collaborates on taking care into the community, uncovering unmet need, and targeting care through technology and data-enabled early identification and intervention.

One of the CYPHP’s focuses is asthma. Data are gathered from several sources, including biopsychosocial data through a patient portal, routine clinical interaction data, data on wider determinants of health such as poverty and air quality, and data gathered through research that patients can opt into through the patient portal.

The team of clinicians, managers and researchers then translate these data into action by using them to make personalised decisions about patient care, support decisions on triage and inform what packages of care might be needed. The data are also used to inform population health management approaches by identifying which geographic areas have the greatest need, enabling earlier intervention.

The data are also being used for wider quality improvement and research activity. For example, through local test beds, the CYPHP is using a pragmatic but rigorous approach to evaluation by running randomised controlled trials alongside service evaluations that can quickly provide evidence to clinicians to support continuous improvement.

The CYPHP has demonstrated impact through a service evaluation, which showed improved health outcomes and quality of care as well as reductions in emergency department contacts and admissions. Our interviews with the team highlighted that by understanding population need through data, it is possible to deliver care that is proportionate to need and that can therefore help reduce inequalities in access to care among children, while also reducing associated costs.

Box 5: International examples of learning health systems

While the case studies and examples featured in this report are from the UK, it is worth noting that there are many instances of LHS approaches being taken in other countries. Below we highlight three examples.

ImproveCareNow, United States

ImproveCareNow was set up to improve care for children and adolescents with inflammatory bowel disease in the US, where care had varied significantly in both diagnostic testing and treatment. By setting up a ‘collaborative learning network’, ImproveCareNow brought together a community of clinicians, researchers, patients and parents to use routine data for research and continuous improvement. All patients with inflammatory bowel disease within the network are now enrolled in a single patient registry, allowing ImproveCareNow to assess the impact of improvements on outcomes. Since its inception in 2007, ImproveCareNow has seen remission rates increase from 55% to 77%, and the network has grown to provide care for more than 17,000 patients across 30 states of the US.

Swedish Rheumatology Quality Registry, Sweden

By building on routine care data and their existing ‘outcomes dashboard’, clinicians in Sweden’s Gävle County were able to improve outcomes for patients with rheumatic diseases, going from having the worst outcomes in the country to the best. Patients were supported to use their data at home to understand when they might be out of remission. They were also able to use the information to control their care through an ‘open–tight’ model: when patients were doing well, they were ‘open’ to visiting a clinician if they felt they needed to and were supported to self-care, but if they were not doing as well, they would be ‘tightly’ cared for until that care was no longer needed. This approach both decreased unnecessary attendances and encouraged self-management approaches that saw outcomes improve substantially.

Johns Hopkins Medicine’s ‘learning and improving system’, United States

In recent years, Johns Hopkins Medicine has introduced an organisation-wide LHS approach that seeks to break down traditional silos between research and practice in order to improve patient outcomes and reduce waste. Bringing key leaders together around a clear and compelling, patient-centred purpose, its ‘learning and improving system for quality and safety’ is underpinned by a wide-ranging learning community, but with clear links to management for accountability. By aligning its goals and strengths across a broad range of stakeholders, the approach has seen significant improvements in a range of areas, including reductions in surgical-site infections of more than 50% and significant improvements in patient feedback.

Four key areas that can support progress on learning health systems

2.1. Introduction

As highlighted in Chapter 1, there is growing interest in learning health systems (LHSs) as a vehicle for improving care quality and service delivery. But given that LHSs are multifaceted and can be highly complex, it can be difficult to know where to begin. So, what should policymakers, and organisational and system leaders, be focusing on to support the development and spread of LHS approaches?

Our research and engagement with expert stakeholders highlighted four key areas where targeted action could help to advance the use of LHS approaches:

- learning from data

- harnessing technology

- nurturing learning communities

- implementing improvements to services.

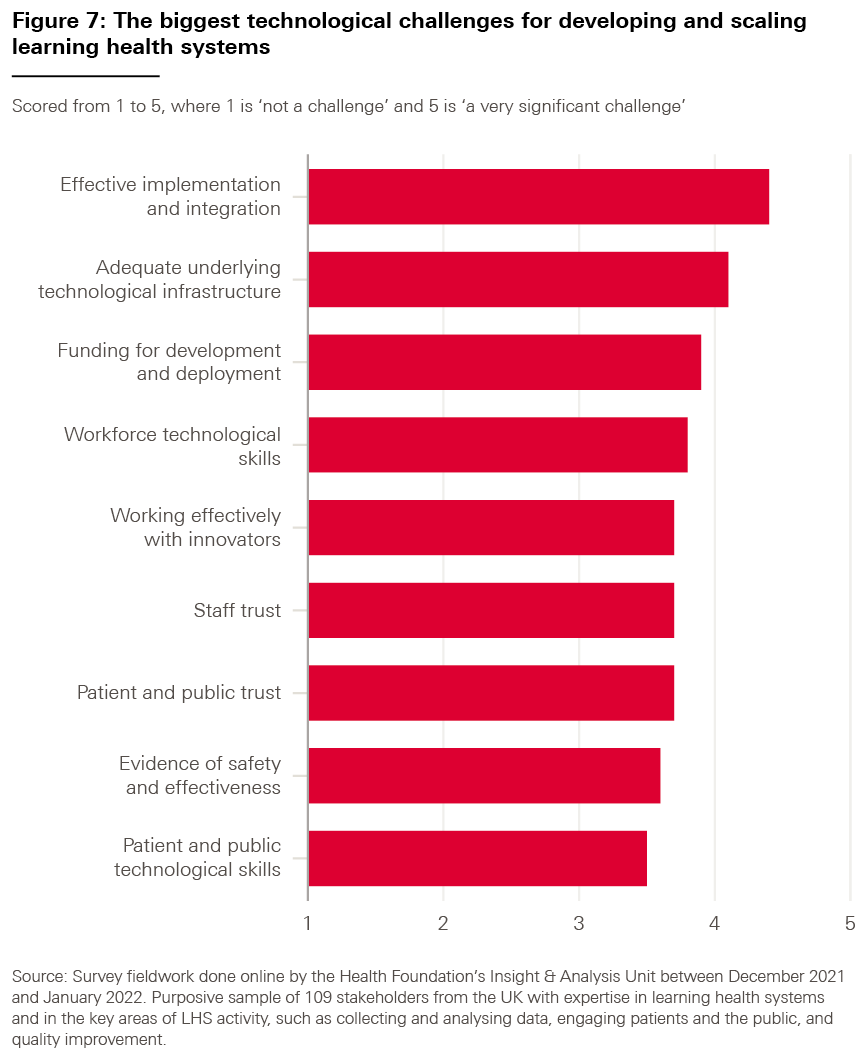

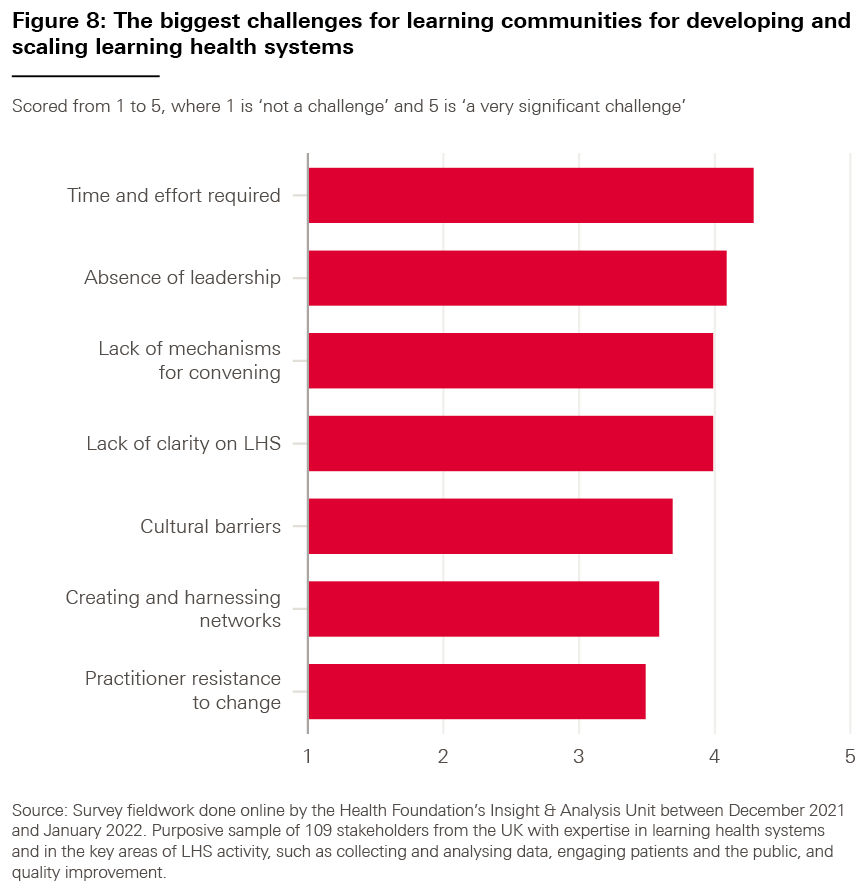

These four areas, which link to the assets and capabilities underpinning LHSs as described in Chapter 1, form key lines of enquiry in this chapter. Specifically, we discuss each of the four areas in turn, setting out the current context, opportunities and challenges. In each case, we also report the results of our stakeholder survey to identify which of the challenges we describe currently require the most attention.

2.2. Learning from data

2.2.1. Key issues and opportunities

The potential of data for learning health systems

Better use of data has huge potential to improve the quality, safety and cost-effectiveness of care and to address unwarranted variation across the whole health service. The data can be quantitative or qualitative and be drawn from many sources. As discussed in Chapter 1, one of the characteristic features of an LHS is that it uses data generated from routine care delivery – whether clinical, operational or patient-reported. However, LHSs are of course not restricted to these data and can draw on a wide range of other sources, such as patient surveys, research and clinical trials, and, increasingly, technology such as smartphones and wearable devices (wearables). Wearables include smart watches and medical technologies that are worn by individuals to track, analyse and transmit data.

Within quality improvement work, it is not uncommon to hear people distinguish between ‘data for care’ and ‘data for improvement’. But a more useful approach is to distinguish between the primary purposes of health care data (delivering direct clinical care) and the secondary purposes of such data (such as research, population health management and improvement). Both uses of data support the work of LHSs, in particular by informing the design of services and helping to build understanding about how they are performing and how they can improve.

As the volume, breadth and quality of data increase, so too do the opportunities for learning and improvement. Wider sources of data, such as data generated by smartphones and wearables, and data on the social determinants of health, are increasingly playing a valuable role by allowing us to build a more holistic picture of our populations. SAIL Databank (case study 7), a Trusted Research Environment that contains data about the whole population of Wales, shows how this wide range of data can be brought together.

Combining and analysing data from different sources can generate new clinical evidence, as the Informatics Consult project (case study 8) shows. Such evidence is particularly important for supporting treatment decisions for patients with complex health needs, where traditional forms of evidence are lacking.

Understanding the health and needs of people and their communities

The move towards better collaboration between health and care providers and commissioners – through, for example, health boards in Scotland and integrated care systems in England – provides an opportunity to improve the health of their populations. By integrating infrastructure, developing standards for interoperability (the ability of two or more systems to exchange information and use that information) and working collaboratively, these organisations can identify where within their populations the greatest needs lie, and work together with stakeholders, including members of the community, to design data-driven interventions to address those needs.

There are particular opportunities for better use of patient-reported data. The NHS collects a large amount of patient-reported data, but they could be used much more effectively. This is where an LHS approach can help, because it centres on the ambition of putting knowledge into practice.

Data collected from communities offer the opportunity to help health care services better understand what matters most to those communities. This includes national collections such as patient and service user experience surveys and patient-reported outcome measures, as well as local routes, from focus groups to service user surveys. Initiatives such as the Networked Data Lab, which the Health Foundation leads, are linking these datasets together to build a more complete picture of the relationships between the wider determinants of health, health needs, service use, patient pathways and health outcomes.

Data about particular health conditions, including on diagnosis, treatment and outcomes, also offer potential for LHSs – including the possibility of creating LHSs around particular conditions. Clinical registries and clinical audits, for example, allow comparisons between multiple sites, reveal variation in processes and outcomes, and identify where improvements can be made.

Making data easy to understand and actionable

While the growth in the volume of data means there is ever more knowledge that can be generated, the amount of data already exceeds what people working in health care can assimilate. There are other challenges too. Often data are not presented in a useful way, and long time lags (between data collection and use) mean that they can be too old to have relevance to health care decisions today. Timelier data could change this: as patient registries become able to collect data on patients’ priorities in real time, incorporating patient-reported data with clinical data, they could become a critical part of the infrastructure of LHSs where patients, clinicians and scientists work on service improvements together.

So, there is considerable scope to improve both the curation of data (the organisation and management of data from various sources) and the visualisation of the data – along with how and when the data can be accessed, so that health care professionals can see relevant insights in a timely and digestible way to support their decision making. For example, the Clinical Effectiveness Group at Queen Mary University of London (case study 5) has created interactive dashboards showing performance across GP practices in north-east London, allowing for the identification of areas requiring improvement.

Elsewhere, academics at the University of Edinburgh have developed a dashboard to support GPs to improve care for people with asthma across the UK (case study 9). The dashboard gives GPs weekly data on asthma indicators at their practice and shows how the data compare with those for other practices.

Building data analytics capability

Data analytics methods, tools and skills – including novel statistical models and data linkage – are a critical part of LHSs because they generate new knowledge and insights to support the learning and improvement process.

Our research and funding programmes at the Health Foundation show that the data analytics capability that exists in the health and care system is both underdeveloped and underused.

The impact of data analysts could be increased considerably through training and professional development, and through better access to the software tools required to generate insights from data. Specialist analytical expertise can also help to develop data literacy in the wider health care workforce. For example, the Flow Coaching Academy (case study 1), a model centred on a multidisciplinary micro-team approach to improving service delivery, shows how this potential can be exploited. By bringing data analysts into multidisciplinary team meetings known as ‘Big Rooms’, and coaching clinical staff in how to interpret data, the model enables teams to gain a better understanding of how services are performing and, critically, where improvements could be made.

Evaluating improvements

Testing and evaluation – which rely on collecting the right data – are a critical part of an LHS to show whether changes made have led to improvements. This mostly happens as an integral part of the iterative learning process, with the data collected in subsequent learning cycles being used to assess the service improvements implemented in previous cycles.

There are promising ways in which testing and evaluation can be done that marry robustness with timeliness. For example, the Children & Young People’s Health Partnership (case study 6) shows how running different evaluation models simultaneously can effectively support the learning and improvement process. By running randomised controlled trials at the same time as service evaluations, the partnership can give evidence quickly to clinicians to inform care improvements, and to commissioners and managers who can make rapid decisions about embedding new services into ‘business as usual’, while building a robust evidence base for children’s health care more widely.

2.2.2. Challenges

While some health care services and systems have made great progress in collecting and using data, our research suggests there are a range of challenges that stand in the way of using data to drive continuous learning and improvement in the manner that is required for LHSs. Several of these challenges have been highlighted in the government’s recent strategy Data Saves Lives, which also sets out steps to make progress on many of them.

LHSs require the availability of high quality, actionable data on a range of issues, including performance, outcomes, experiences and processes. This has been a particular challenge for patient data as progress towards the digitisation of patient records has been slow. Where data are recorded electronically, they do not always meet quality standards, nor are they always useful. Data can be incomplete or captured incorrectly.

Stakeholders we spoke to during our research told us that there are significant issues surrounding data access and sharing, including information governance and data security, which can limit the work of an LHS. In order for a team to be able to access data, a range of approvals are required, which vary in number and complexity due to a lack of standardisation. In addition, the regulation of data often lags behind the rate of innovation and the data that it produces. Financial costs associated with data access can also hamper the ability to use data to learn and improve. Health and care data must be in a readable, actionable format to have value, which often relies on data system vendors curating and cleaning the information they hold, for which they can then charge sizeable fees.

It can be difficult to know what approvals are required to access data and from whom. For example, innovators involved in the RADAR project (case study 10) told us how introducing the MyWay digital health app into some parts of England required data-sharing agreements with each data controller. These included individual GP practices, clinical commissioning groups and Caldicott guardians (who are responsible for protecting the confidentiality of people’s health and care information and making sure it is used properly), and compiling population-level data for the area took an enormous amount of time and effort. By contrast, use of the platform in north-west London was far simpler, as data access is dealt with at the integrated care system level, where there is a structure for data controllers to sign off electronically and a population-level dataset already exists.

Our experience of funding data analytics projects at the Health Foundation suggests that linking and integrating datasets can also be particularly difficult, not only because of technical challenges, but also because of the way in which increased data linkages can challenge processes of anonymisation. The number and complexity of processes required to link data can be particularly challenging. For example, in the LAUNCHES QI study (linking audit and national datasets in congenital heart services for quality improvement), run by University College London, which sought to improve services for people with congenital heart disease by linking five national datasets, the process took 2.5 years to achieve, requiring nearly 50 documents for the data application processes, which needed to be submitted 162 times in total. Stakeholders told us that there are also challenges with integrating datasets, and insights derived from them, within electronic health records and that integrating analytical tools into electronic health records can be both difficult and very expensive.

System interoperability – the ability of two or more systems to exchange information and use that information – continues to be a challenge in health and social care. With an assortment of different data systems, and a lack of common standards, it can be difficult to gather and aggregate the data needed to drive an LHS. A 2020 National Audit Office report on digital transformation in the NHS in England highlights that while interoperability has been a priority for policymakers since 1998, progress has been slow, and a lack of clarity on standards to encourage new suppliers to enter the NHS could make interoperability harder to achieve.

For health care services to learn about and design the most effective interventions, they require data that go beyond routine clinical data – for example, data on the social determinants of health. Yet access to a wide-enough range of data can be challenging and many types of information are not captured in datasets. For example, routine NHS data do not include data on those who do not access health services, nor do they include information about a patient’s health in between interactions with the health service – both of which could improve understanding of the drivers of ill health.

The disproportionate impact of the COVID-19 pandemic on some groups has rightly highlighted the importance of tackling health inequalities, but to do this requires data that are representative of all patient populations and free from bias, which is often not the case. This can be due, for example, to a failure to capture data on characteristics (such as ethnicity) accurately, a lack of representation of different demographic groups in research or a lack of access to technologies that capture data. Such factors can render datasets biased, which can lead to decisions that either do not bring about improvements to service delivery or – worse – risk adverse outcomes and experiences for some service users. This is a particular concern for data-driven health technologies such as those using artificial intelligence and machine learning.

Understanding Patient Data research shows that most people support sharing patient data for individual care and a high proportion of people support sharing patient data for research where there is public benefit. But making sure that patients and the public, and also the health care workforce, perceive the collection, sharing and use of data to be trustworthy can be a challenge. Transparency and open dialogue with the public are important ways of achieving this but are not always addressed sufficiently.

Data need to be formatted and ‘cleaned’ before they can be analysed. This can be laborious and time-consuming work, which often fails to get the attention and resources it requires and is frequently done on an ad-hoc basis, leading to duplication of data. Both this data curation work and the subsequent data analysis require specialist skills and knowledge, and while there have been moves to build this capability across the health and care system – including through NHS England’s work to develop a National Competency Framework for Data Professionals in Health and Care – more is required. A related issue is the level of health data literacy across the wider workforce: staff, including those in management and leadership positions, need to be able to interpret data appropriately.

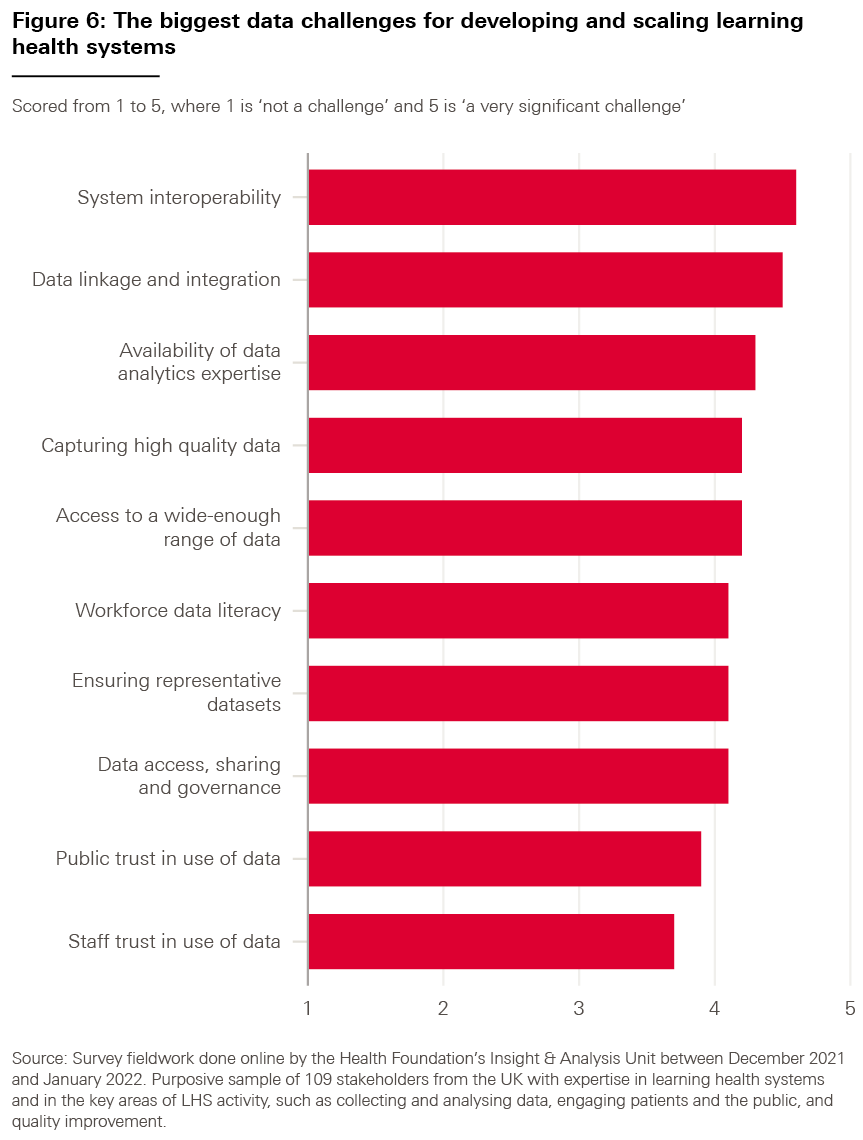

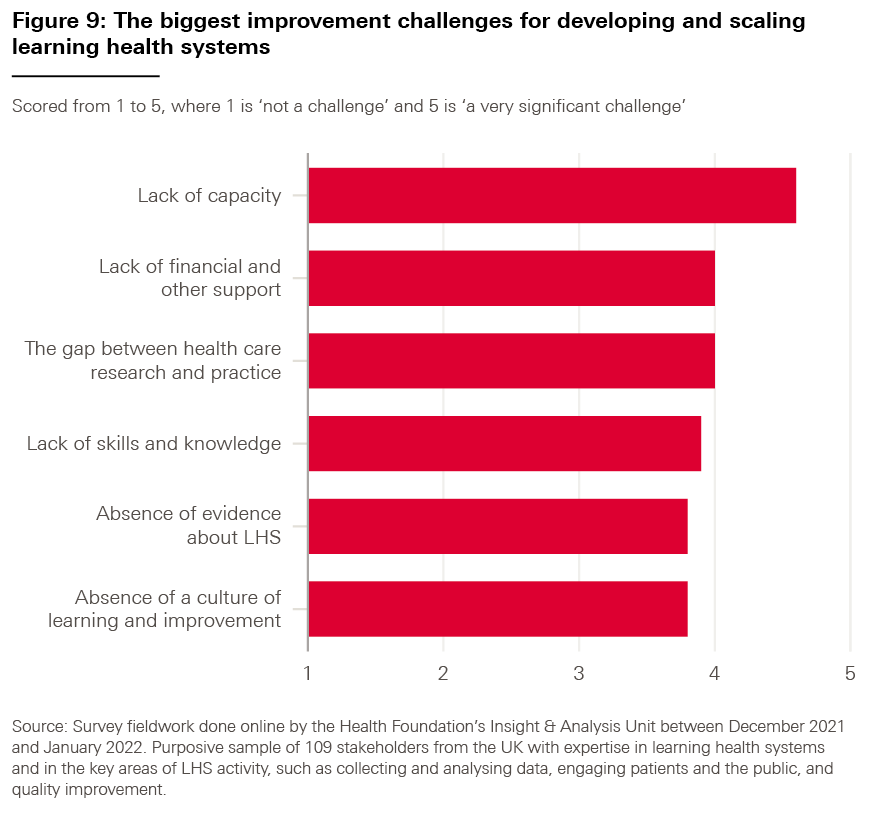

We asked our survey respondents which of the data challenges described above they considered to be the most significant for adopting LHS approaches; the results are shown in Box 6. (For each part of our survey, we also asked our respondents to tell us whether there were any challenges we might have missed, but the responses suggested that there were no significant omissions.)

Box 6: Data challenges – what our respondents said

As shown in Figure 6, the results indicate that all proposed factors were thought to be a challenge to some degree, with an average score of 4.15 out of 5 (where 5 represents ‘a very significant challenge’).

The highest-ranked challenge was ‘interoperability between data systems, both within and across organisations’ (4.6), followed by ‘linkage between datasets and integration of these datasets with electronic health records’ (4.5).

Case study 7: The Secure Anonymised Information Linkage (SAIL) Databank

Established in 2007, the Secure Anonymised Information Linkage (SAIL) Databank is a Trusted Research Environment holding anonymised individual-level data for the whole population of Wales. One of the world’s first Trusted Research Environments and hosted within Population Data Science at Swansea University, SAIL was set up to use data gathered in health and social care delivery to better inform research, improve services and inform population health strategy. It includes data generated by clinical interactions and by interactions with social and community services, allowing analysis of the links between health outcomes and social factors.

SAIL operates according to a ‘privacy-by-design’ model, which uses physical, technical and procedural measures to safeguard the data it contains and prohibits the sharing of data outside the databank without special dispensation. In addition, prospective researchers who want access to data held in the databank must complete a two-stage application process, which is assessed by an independent Information Governance Review Panel.

Population Data Science created the Secure eResearch Platform (SeRP), which powers the SAIL Databank and allows researchers from across the world to access data linkage services and a wide range of data, and to use analytical tools to answer important questions. By 2020, SAIL had more than 1,200 registered users and had been used to deliver more than 300 research projects, including the development of National Institute for Health and Care Excellence (NICE) clinical guidelines. Strong relationships with many partners – such as Digital Health and Care Wales, the Welsh government and Public Health Wales – underpin the SAIL approach, which has enabled the use of data to inform decisions in policy and practice, notably those made in response to the COVID-19 pandemic through the One Wales collaboration.

From the beginning, the SAIL team recognised that public trust in the handling and sharing of personal data would be critical to the databank’s success. This trust relies on several interacting factors, such as cultural values, personal preferences and mass media influences. To gain public trust, the SAIL team developed a programme of public involvement and engagement to assess public opinion and gain input into policies and practices, overseen by a consumer panel that includes members of the public. The consumer panel also advises on routes and methods for engaging with the population, recommends how information can be shared with the general public and assists in reviewing proposals from researchers applying to access the databank. Projects that have used SAIL are shared on its website, with a description and a list of outputs, to support communication and transparency with members of the public and stakeholders.

Case study 8: Informatics Consult

A Health Data Research UK (HDR UK) and Health Foundation Catalyst project

While clinical guidelines play an important role in health care delivery, they are not always backed by a robust evidence base from clinical trials and research. This becomes more challenging as the number of people with comorbidities increases and the health needs of the population become more complex: there are many situations in which a treatment indication and a contraindication coexist in one patient, for example in patients with both heart failure and kidney failure. Because treatment for one condition can have an adverse impact on another, and evidence for some treatment options is limited, partly because randomised controlled trials frequently exclude patients with several comorbidities, it can be difficult for clinicians to determine the best treatment for their patients.

The growing availability of large datasets and the tools to analyse them provides an opportunity for improving decision making, particularly for patient groups for whom robust evidence does not yet exist. The Informatics Consult platform allows clinicians to select a health condition and order an analysis of large datasets to aid decision making for the specific patient in front of them. The platform employs automated approaches for creating analysis-ready cohorts using the ‘DExtER’ tool. Within hours, the platform returns easily interpretable clinical information – including on the potential benefits of treatment, prognosis and mortality risk – which can support more personalised treatment plans.

Drawing on population data contained within electronic health records, the platform presents analysis in a way that is understandable for clinicians and can be discussed with their patients. This is not always straightforward, though. For example, many rare conditions do not have specific clinical codes, which makes them difficult to incorporate into the platform.

The project team plans to test the Informatics Consult platform in four NHS trusts to support decision making with patients who have both liver cirrhosis and atrial fibrillation, where anticoagulants can treat the latter while making the former worse. A pilot conducted using Informatics Consult generated new clinical evidence for patients in this group, showing that warfarin initiation was common, may be associated with lower all-cause mortality and may be effective in lowering stroke risk. Surveys of clinicians using the platform showed that 85% found information on prognosis useful and 79% thought they should have access to the platform as a service.

It is hoped that, by providing information on the prevalence of conditions as well as on the safety and efficacy of particular medications, the platform will stimulate further initiatives to generate new analyses for a wider range of prognostic outcomes. Given the rising trend of multimorbidity, especially in younger people, the Informatics Consult may contribute to a knowledge base generated from real-world datasets that addresses the current gaps in randomised controlled trials (arising from the exclusion of patients with comorbid conditions).

Case study 9: Towards a national learning health system for asthma in Scotland

Asthma is a significant cause of ill health and hospitalisation in the UK, costing an estimated £1.1bn and leading to 1,400 deaths every year. Around one in every 14 people in Scotland is currently receiving treatment for asthma, and 89 in every 100,000 people are hospitalised each year due to exacerbations (increases in severity). Timely patient data are key to understanding and preventing exacerbations.

To address this challenge, researchers at the University of Edinburgh are working towards developing a national LHS for asthma that will support clinicians to identify and address modifiable factors that can contribute to exacerbations.

By harnessing routinely collected, anonymised data from the Oxford Royal College of General Practitioners Clinical Informatics Digital Hub (ORCHID), the team created an online dashboard for asthma that gives GPs weekly updates on how their practice compares with their network across several indicators.

Information provided includes comparative data on asthma prevalence, vaccination uptake, smoking rates, hospitalisations and body mass index measurements. This enables GPs to see changes in their practice, compare themselves to other practices and rapidly respond to better support people with asthma, prevent exacerbations and potentially prevent avoidable deaths.
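The kind of practice-versus-network comparison described above can be sketched as follows. This is a hypothetical illustration only: how the ORCHID-based dashboard actually calculates its indicators is not described here, and the practice names, counts, the `PracticeCounts` class and the `compare_with_network` function are invented. The sketch assumes each indicator is a simple proportion of a practice’s asthma patients and pools the network figure across all practices in the network, including the one being compared.

```python
from dataclasses import dataclass


@dataclass
class PracticeCounts:
    """Hypothetical weekly counts for one GP practice (invented fields)."""
    name: str
    asthma_patients: int
    vaccinated: int
    smokers: int


def proportion(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when there is no denominator."""
    return numerator / denominator if denominator else 0.0


def compare_with_network(network: list[PracticeCounts], name: str) -> dict[str, tuple[float, float]]:
    """For each indicator, return (practice value, pooled network value)."""
    practice = next(p for p in network if p.name == name)
    total_patients = sum(p.asthma_patients for p in network)
    results = {}
    for indicator in ("vaccinated", "smokers"):
        practice_value = proportion(getattr(practice, indicator), practice.asthma_patients)
        network_value = proportion(sum(getattr(p, indicator) for p in network), total_patients)
        results[indicator] = (practice_value, network_value)
    return results


# Invented example figures, not real practice data.
network = [
    PracticeCounts("Practice A", asthma_patients=120, vaccinated=60, smokers=24),
    PracticeCounts("Practice B", asthma_patients=80, vaccinated=56, smokers=8),
]

for indicator, (mine, pooled) in compare_with_network(network, "Practice A").items():
    print(f"{indicator}: practice {mine:.0%}, network {pooled:.0%}")
```

With these invented figures, Practice A’s vaccination uptake (50%) sits below the network’s (58%), the kind of signal that could prompt a practice to review how it supports people with asthma.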

In repurposing routine data to generate knowledge that can then be incorporated into clinical practice in real time, the LHS for asthma is one of the first applications of LHS approaches at a national level outside the US. The project champions innovative approaches to near real-time data visualisation, allowing health care providers to compare care and service quality to evidence-based standards and drive improvement.

While the impact has so far been limited due to the effects of the COVID-19 pandemic and workforce pressures, the researchers are now seeking further funding to develop complementary behavioural, motivational and organisational interventions that can tackle the barriers to using the data to make improvements. They are also going to develop a learning-based prediction model in order to create a personalised risk assessment tool to further support clinicians to predict asthma attacks and reduce asthma morbidity and mortality.

2.3. Harnessing technology

2.3.1. Key issues and opportunities

While not a necessary part of an LHS, technology can clearly play a very important enabling role, for example by:

- enhancing data collection and analysis

- accessing high-quality information through electronic health records

- helping with clinical decision making

- supporting the design and implementation of improvements.

Increasing the use of digital health technologies is a priority for the UK’s health and care systems to support both service delivery and improvement. For example, NHS England’s service transformation plans are currently seeking to capitalise on the potential of LHSs to generate and embed knowledge and drive improvements in health and care.

Enhancing data collection and analysis

Digital technologies can support LHSs by enhancing the way health data are collected, for example through devices such as mobile phones, wearables and sensors. These technologies can enable the real-time collection and analysis of health data that may previously have been prohibitively expensive, intrusive or time-consuming to collect or understand. For example, the CFHealthHub (case study 3) shows how data gathered through the routine use of Bluetooth-enabled nebulisers help clinicians to understand how well existing care pathways are supporting people with cystic fibrosis. By integrating technologies into the existing care pathway, this example shows how data can be used to support system learning and improve personalised support for people with long-term health conditions. The use of digital health technologies such as these may also mean that the data collected are of higher quality than data entered into a system manually. But it is important to make sure that those using these technologies are comfortable with using them, capable of using them and motivated to do so.